So I've been using a script since the Jamf JumpStart, and it had been working a treat, but recently it's become sporadic and only works when it feels like it.

I found that it adds a keychain entry for each drive, and removing these was kicking it back into action, but now this isn't fixing it either.

Script is:

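    #!/bin/bash

    # Use the username Jamf passes in $3; fall back to the console owner if it is empty.
    if [ "$3" == "" ]; then
        user=$(/bin/ls -l /dev/console | /usr/bin/awk '{ print $3 }')
    else
        user=$3
    fi

    echo "User logging in is $user"

    # Mount the share as the user; $4, $5 and $6 are the server, share and type set in the policy.
    sudo -u "$user" /usr/local/jamf/bin/jamf mount -server "$4" -share "$5" -type "$6" -username "$user" -visible &

    exit 0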
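
One thing that might be worth ruling out: when nobody has finished logging in yet, `/dev/console` is owned by `root`, so the fallback could hand the mount a name that isn't a real user. Below is a minimal sketch of a guard for that, not something from the original script. The function names `is_real_user` and `resolve_user` are made up for illustration, and the list of names to reject is an assumption.

```shell
#!/bin/bash

# Hypothetical helper: succeed only if the name looks like a real logged-in user.
# The rejected names are an assumption; adjust them for your environment.
is_real_user() {
    case "$1" in
        ""|root|loginwindow|_mbsetupuser) return 1 ;;
        *) return 0 ;;
    esac
}

# Hypothetical helper: prefer the name passed in (Jamf's $3),
# otherwise fall back to the owner of /dev/console.
resolve_user() {
    local candidate="$1"
    if [ -z "$candidate" ]; then
        candidate=$(/bin/ls -l /dev/console | /usr/bin/awk '{ print $3 }')
    fi
    printf '%s\n' "$candidate"
}
```

In the script this would be used as `user=$(resolve_user "$3")`, followed by skipping the mount and echoing a message whenever `is_real_user "$user"` fails, so the policy log at least shows which name was picked.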

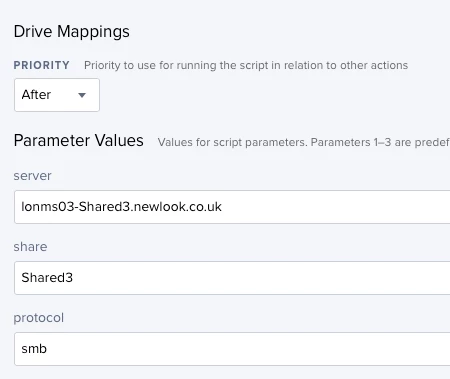

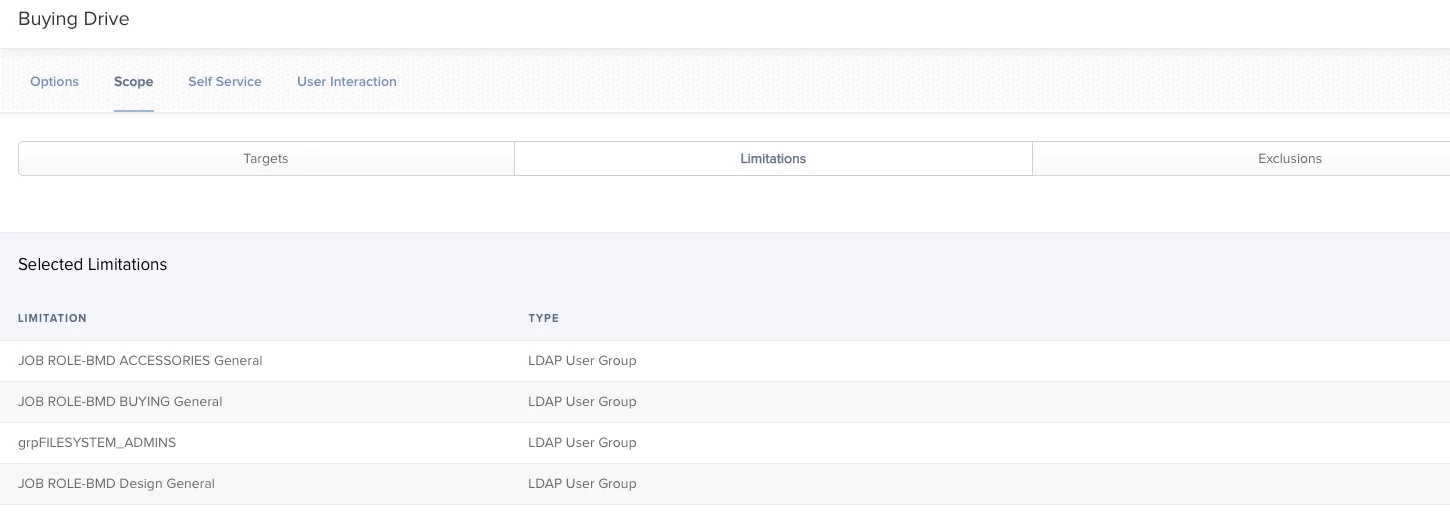

So I have separate policies to map each drive, and these run based on AD group memberships (see attached screens).

Mapping the drives manually works fine, so there's nothing wrong with users' permissions or their group memberships; it's just that these policies seem to pick and choose when they run and who they run for.

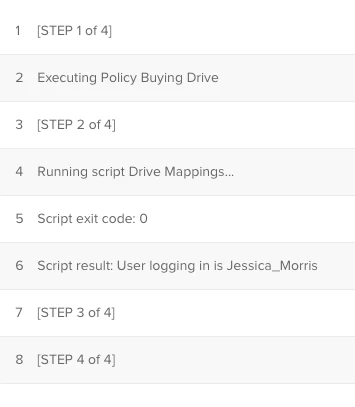

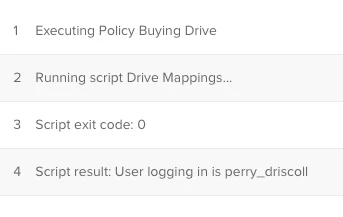

Policy logs do show some differences, but they all say completed and show no errors (see attached screens).
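
A possible reason the logs look clean every time: the script backgrounds the mount with `&` and then always runs `exit 0`, so the policy would report success whether or not the mount actually worked. Here is a minimal sketch for testing that idea. `run_and_report` is a hypothetical helper, not part of Jamf, and it assumes it is acceptable to wait for the mount to finish while troubleshooting.

```shell
#!/bin/bash

# Hypothetical helper: run a command in the foreground, print what it
# produced, and return its exit status so the caller can pass it on.
run_and_report() {
    local output status
    output=$("$@" 2>&1)
    status=$?
    echo "Command: $*"
    echo "Output: $output"
    echo "Exit status: $status"
    return "$status"
}
```

For a test run, the mount line could become `run_and_report sudo -u "$user" /usr/local/jamf/bin/jamf mount ...` with the same arguments as before and no trailing `&`, followed by `exit $?` instead of `exit 0`. I'm not certain whether running the mount in the foreground inside a policy has side effects of its own, so treat this as a troubleshooting aid, not a permanent change.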

Any ideas, or changes to the script you think I need?