While I wait for a response from JAMF support, I'm in need of help and a sanity check regarding the database and the JDS.

Yesterday I uploaded a 7.14 GB DMG... twice. The first time I used Casper Admin. It took a while, but when it finished all looked good, until I relaunched Casper Admin and found the file listed in red. I checked my JDS via the CLI and, sure enough, the file was nowhere to be found. I then re-uploaded the file through the JSS interface. That took some time as well, and the file still did not appear in the CasperShare folder in the CLI.

After some research, I found that the JDS needs a larger /tmp folder than we typically allocate. Fine, I resolved that issue by symlinking /tmp to the /data partition.

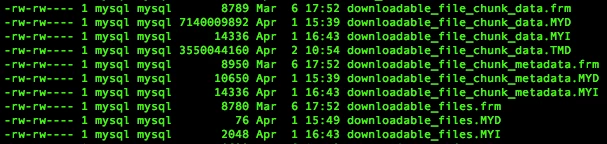

Now, however, I've noticed my database is unresponsive: I can't stop MySQL or log in to MySQL. Each JSS server is stuck at 'Initializing Database' after a quick restart. Browsing mysql/data/jamfsoftware in the CLI, I've found some large files, each about the size of my DMG... (Picture attached.)
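For anyone who wants to check their own server for the same thing, here is a minimal Python sketch that lists the largest files in a directory. The function name `largest_files` and the command-line handling are my own illustration, not anything shipped with the JSS or JDS; pass the full path to your own jamfsoftware data directory as the first argument.

```python
import os
import sys


def largest_files(directory, top_n=5):
    """Return (size_in_bytes, path) tuples for the top_n largest regular
    files directly inside directory, largest first."""
    entries = []
    with os.scandir(directory) as it:
        for entry in it:
            # Skip subdirectories and symlinks; only count real files.
            if entry.is_file(follow_symlinks=False):
                size = entry.stat(follow_symlinks=False).st_size
                entries.append((size, entry.path))
    entries.sort(reverse=True)
    return entries[:top_n]


if __name__ == "__main__":
    # The directory is an assumption: supply your own MySQL data path.
    # Falls back to the current directory if no argument is given.
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for size, path in largest_files(target):
        print(f"{size / 1024**3:8.2f} GiB  {path}")
```

Files close to 7 GB in that listing would line up with the size of the DMG I uploaded.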

The first question that comes to mind is WTF are those doing there, but putting two and two together, I imagine uploaded files pass through the DB before hitting the JDS. Because my JDS couldn't replicate, they got stuck there; because of their size, our /data partition is now full; and because our /data partition is full, MySQL has become unresponsive...
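The "partition is full" part of that theory is at least easy to confirm. A minimal Python sketch, assuming only the standard library (`usage_report` is a name I made up for illustration); pass the mount point, e.g. /data, as the first argument:

```python
import shutil
import sys


def usage_report(path):
    """Return (total, used, free, percent_used) for the filesystem that
    contains path. Sizes are in bytes."""
    usage = shutil.disk_usage(path)
    percent_used = usage.used / usage.total * 100 if usage.total else 0.0
    return usage.total, usage.used, usage.free, percent_used


if __name__ == "__main__":
    # Falls back to the current directory if no mount point is given.
    mount = sys.argv[1] if len(sys.argv) > 1 else "."
    total, used, free, pct = usage_report(mount)
    gib = 1024 ** 3
    print(f"{mount}: {used / gib:.2f} of {total / gib:.2f} GiB used "
          f"({pct:.1f}%), {free / gib:.2f} GiB free")
```

If free space on /data comes back at or near zero, that would fit with MySQL hanging, though it does not by itself prove the uploads are what filled it.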

help...