Here is a script I wrote ages ago for Self Service that creates a txt file of the disk usage stats on the machine and opens it in Safari.

Maybe it's useful to you. It can take a little while to run on a very full traditional HDD, but it's pretty quick on an SSD, and you could probably remove any du commands that aren't relevant to what you're trying to capture.

```bash
#!/bin/bash

# The owner of /dev/console is the user logged in at the GUI ("root" at the login window)
CURRENT_USER=$(stat -f %Su /dev/console)

if [ -n "$CURRENT_USER" ] && [ "$CURRENT_USER" != "root" ]; then

    REPORT="/Users/${CURRENT_USER}/${CURRENT_USER}-$(hostname)-sizes.txt"

    {
        echo "===== Disk usage report for ${CURRENT_USER} on $(hostname) ====="
        echo
        echo "===== Disk usage for top 10 users in MB ====="
        # -x: don't cross file systems; -d 0: one total per argument
        # (the depth must directly follow -d, so "-d -x 0" was an error)
        du -m -x -d 0 /Users/* 2>/dev/null | sort -nr | head -n 10
        echo
        echo "===== Disk usage for top 10 folders in ${CURRENT_USER} showing the top 5 items in each folder in MB ====="
        echo

        # du separates size and path with a tab, so cut -f2- keeps paths with spaces intact
        du -m -x -d 0 "/Users/${CURRENT_USER}"/* 2>/dev/null | sort -nr | head -n 10 | cut -f2- |
        while IFS= read -r FOLDER; do
            if [ -d "$FOLDER" ]; then
                echo "$FOLDER"
                du -m -x -d 0 "$FOLDER"/* 2>/dev/null | sort -nr | head -n 5
            else
                du -m -x -d 0 "$FOLDER"
            fi
            echo
        done
    } > "$REPORT"

    # The script runs as root, so hand the report to the user before opening it as them
    chown "$CURRENT_USER" "$REPORT"
    su - "$CURRENT_USER" -c "open -a Safari '$REPORT'"
fi
```

Apologies for all the CAPS and tight coding; like I said, I wrote it a long time ago :)
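Every section of that report is the same `du | sort -nr | head` pipeline. A minimal sketch that pulls it into a function, so you can point it only at the folders you care about (the name `top_items` is hypothetical, not part of the original script):

```sh
#!/bin/sh
# top_items DIR [N]: print the N largest entries directly inside DIR (default 5),
# one "size-in-MB<TAB>path" line each, largest first.
top_items() {
    # -x: stay on one file system; -d 0: one total per argument
    du -m -x -d 0 "$1"/* 2>/dev/null | sort -nr | head -n "${2:-5}"
}
```

For example, `top_items "/Users/$CURRENT_USER/Library" 5` would list the five largest items in that user's Library. Dropping a report section is then just a matter of not calling it for that folder.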

@Look Thank you for the response. I am a scripting newbie but this is great. I will see if I can adjust the script to pass the data either to another location or Jamf Pro.

I think you can do all of this within the JSS with an extension attribute. This is a great way to get comfortable with EAs and also with custom inventory reports. Extension attributes are really fun (did I just say that?) because you can turn any script output into a value in that computer's inventory.
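As a minimal illustration of that idea: an EA is a script whose output is wrapped in `<result>` tags, so any command's output can become an inventory value. The helper name `ea_result` is hypothetical:

```sh
#!/bin/sh
# ea_result CMD [ARGS...]: run a command and wrap its output in the
# <result></result> tags that the JSS reads from an extension attribute script.
ea_result() {
    printf '<result>%s</result>\n' "$("$@" 2>/dev/null)"
}

# Example: report the kernel release as an inventory value
ea_result uname -r
```

Swap `uname -r` for whatever command produces the value you want to track.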

More info on Extension Attributes in the Casper Suite Administrator's Guide.

First, here's the extension attribute. Notice that, for simplicity's sake, we assume there's only one user on each computer and that they're always logged in (we have laptops, so this is the case for us). You can build on this to iterate through all users' folders if there is more than one, and/or so that you don't have to rely on someone being logged in.

```sh
#!/bin/sh
# Modified from CheckDownloadsFolder.sh
# Check and report via EA size of user's home folder
# github.com/acodega
#

# find logged in user
# (relies on the Python 2 + PyObjC that shipped with older macOS releases;
# Apple removed /usr/bin/python in macOS 12.3)
loggedinuser=`python -c 'from SystemConfiguration import SCDynamicStoreCopyConsoleUser; import sys; username = (SCDynamicStoreCopyConsoleUser(None, None, None) or [None])[0]; username = [username,""][username in [u"loginwindow", None, u""]]; sys.stdout.write(username + "\n");'`

# find how large the user's folder is (the last line of du's output is the folder total)
homefolder=`du -hd 1 "/Users/$loggedinuser/" | awk 'END{print $1}'`

# echo it for EA
echo "<result>$homefolder</result>"
```
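A sketch of the "iterate through all users' folders" variant mentioned above, which doesn't depend on anyone being logged in. It assumes home folders live directly under `/Users`, skips `Shared`, and the function name `home_sizes` is hypothetical:

```sh
#!/bin/sh
# home_sizes [BASE]: print "name: size" for every folder under BASE (default /Users)
home_sizes() {
    base=${1:-/Users}
    for dir in "$base"/*/; do
        # An unmatched glob stays literal, so skip anything that isn't a directory
        [ -d "$dir" ] || continue
        name=$(basename "$dir")
        # /Users/Shared isn't a user's home folder
        [ "$name" = "Shared" ] && continue
        size=$(du -sh "$dir" 2>/dev/null | awk '{ print $1 }')
        printf '%s: %s\n' "$name" "$size"
    done
}

echo "<result>$(home_sizes)</result>"
```

Like any EA, this full `du` pass runs on every recon, so expect it to be slow on machines with large home folders.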

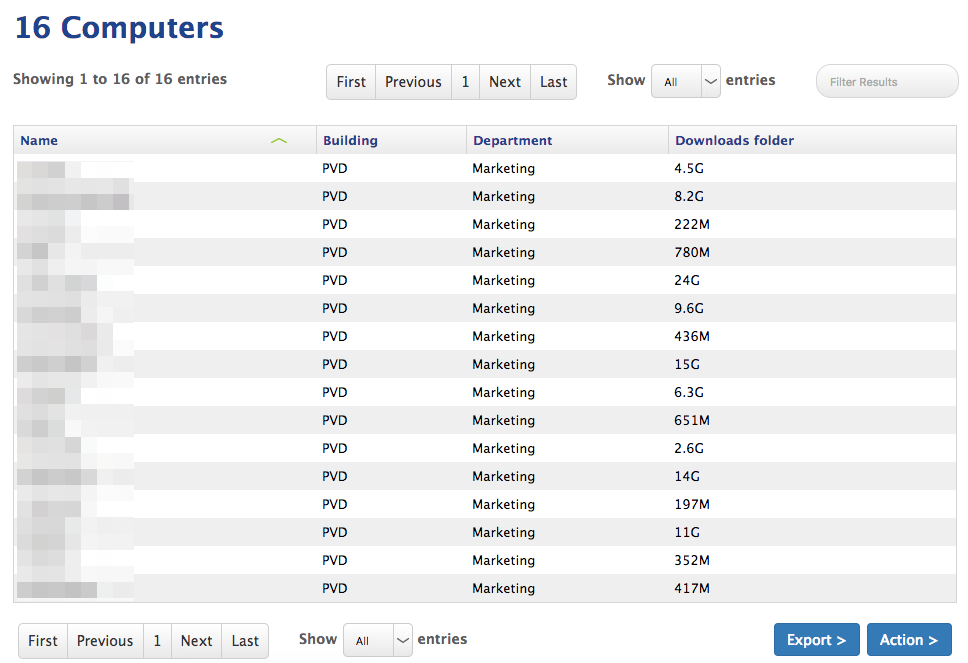

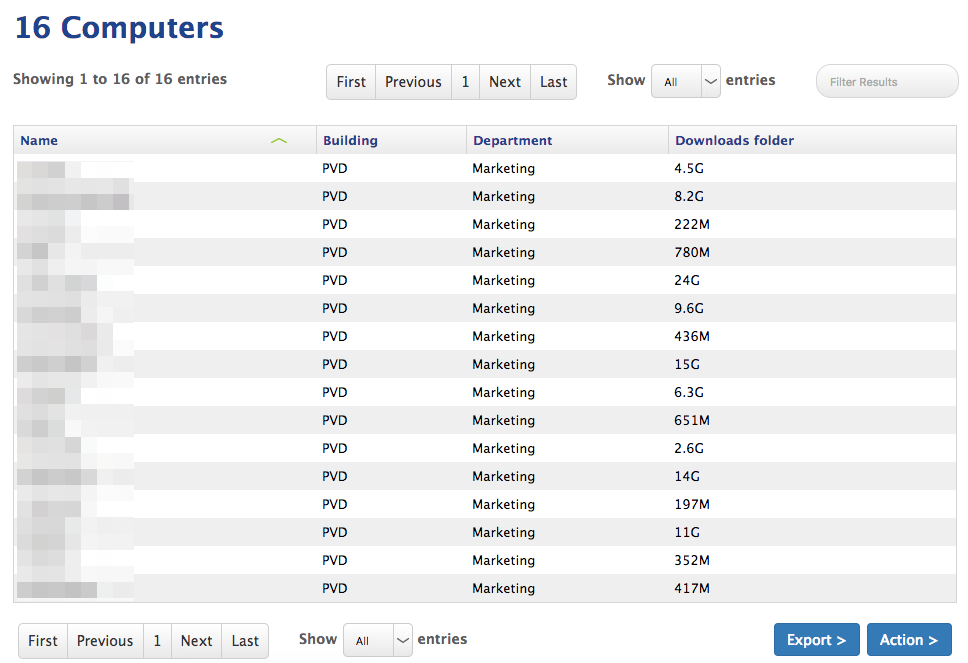

Once we have the extension attribute configured in the JSS, we can go to Computers and, under Advanced Computer Search, click the plus icon. From there, we'll design a search with criteria matching the computer group or department we care about. Then, under Display, check the computer name on the Computer tab and the relevant extension attribute on the Extension Attributes tab, along with any other info that would be helpful to have in the report, and click Search. You can export the search as a CSV or TXT file too.
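If you want to use the size itself as a search criterion (for example, only computers whose home folder is over some size), a human-readable value like `12G` from `du -h` won't compare well. A sketch that reports whole megabytes instead, assuming you set the EA's Data Type to Integer so the search should be able to compare it numerically rather than as text; `home_mb` and `ea_home_mb` are hypothetical names:

```sh
#!/bin/sh
# home_mb DIR: total size of DIR in whole megabytes (empty if DIR can't be read)
home_mb() {
    du -sm "$1" 2>/dev/null | awk '{ print $1 }'
}

# ea_home_mb USER: EA output for /Users/USER as a plain integer
ea_home_mb() {
    echo "<result>$(home_mb "/Users/$1")</result>"
}
```

In the EA above, you would call `ea_home_mb "$loggedinuser"` instead of echoing `$homefolder`.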

Here's an example of what it would look like (my EA reports the size of their Downloads folder).

@jaguar0620 You're welcome. As @adamcodega says, the easiest way to get any script result into the JSS is as an extension attribute.

@jaguar0620 A better way to do this would be to have a policy run once per week to store home folder sizes in a plist file and then read that plist file during recon. The reason for not grabbing the sizes during recon is the amount of time it takes and the stress on the system. I wrote up this exact process over the summer:

Collecting Data Using plist Files

I've had users complain about their machines feeling slow when recon was running, so I moved the home folder size scripts to this method. Remember, as nice as Extension Attributes are, they run every time recon runs, and there is no way to selectively turn them off during recon.

We usually have a script run once a day, using a loop outputting home directory sizes to a text file:

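```sh
#!/bin/sh

# Users with a UniqueID above 1000 (typically network/mobile accounts;
# local macOS accounts start at 501, so lower the threshold to include them)
User=$( dscl . list /Users UniqueID | awk '$2 > 1000 { print $1 }' )

mkdir -p /Library/Company/

# Empty the file once, then append one line per user
# (a single ">" inside the loop would leave only the last user in the file)
: > /Library/Company/home-sizes.txt

for u in $User; do
    results=$( du -sh "/Users/$u" 2>/dev/null | awk '{ print $1 }' )
    echo "$u - $results" >> /Library/Company/home-sizes.txt
done
```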
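If recon happens to run while this loop is still going, the EA could read a partial list. A sketch that builds the list in a temporary file next to the real one and then renames it into place; the function name `write_home_sizes` and its argument order are hypothetical, and the user names are passed in rather than looked up with `dscl`:

```sh
#!/bin/sh
# write_home_sizes OUTFILE BASE USER...: write one "user - size" line per user
# for BASE/USER, replacing OUTFILE in a single rename.
write_home_sizes() {
    out=$1
    base=$2
    shift 2
    mkdir -p "$(dirname "$out")"
    # Temp file in the same directory, so the final mv is a rename
    tmp=$(mktemp "$(dirname "$out")/.home-sizes.XXXXXX") || return 1
    for u in "$@"; do
        size=$(du -sh "$base/$u" 2>/dev/null | awk '{ print $1 }')
        echo "$u - $size" >> "$tmp"
    done
    # mktemp creates the file as 0600; make it world-readable like a normal file
    chmod 644 "$tmp"
    mv -f "$tmp" "$out"
}
```

On a Mac you could call it with the same user list as above, e.g. `write_home_sizes /Library/Company/home-sizes.txt /Users $User`. Because `mv` within one directory is a rename, the EA sees either the old file or the complete new one.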

Then cat the text file using an EA:

```sh
#!/bin/sh

if [ -e /Library/Company/home-sizes.txt ]; then
    echo "<result>$( /bin/cat /Library/Company/home-sizes.txt )</result>"
else
    echo "<result>FileDoesNotExist</result>"
fi
```

This way recon doesn't take too long, and the EA gets fresh home directory sizes for all users daily, since the text file is overwritten each day.
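The EA only reports what the daily script last wrote, so if that policy stops running, inventory keeps showing old numbers. A sketch of the EA that also flags a file that hasn't been refreshed; the function name `ea_home_sizes` and the 2-day threshold are hypothetical:

```sh
#!/bin/sh
# ea_home_sizes FILE MAX_AGE_DAYS: print FILE's contents as an EA result,
# prefixed with "Stale: " if FILE hasn't been modified for about MAX_AGE_DAYS days.
ea_home_sizes() {
    file=$1
    max_age_days=$2
    if [ ! -e "$file" ]; then
        echo "<result>FileDoesNotExist</result>"
    elif [ -n "$(find "$file" -mtime +"$max_age_days" 2>/dev/null)" ]; then
        # find prints the path only if it is older than max_age_days
        # (find rounds ages to whole days)
        echo "<result>Stale: $(cat "$file")</result>"
    else
        echo "<result>$(cat "$file")</result>"
    fi
}

ea_home_sizes /Library/Company/home-sizes.txt 2
```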

Don

Agreed, @stevewood. This was the primary reason we had this as Self Service rather than something we passed back to Casper.

In general, in our environment we don't really need to know individual user sizes until machines are really low on space (which we track in Casper using the built-in EAs).
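Along the same lines, a sketch that only kicks off the expensive per-user `du` report when a machine is actually short on space. It reads the Available column of `df -Pk` for the volume holding a path; the function names and the 10 GB threshold are hypothetical:

```sh
#!/bin/sh
# free_gb [PATH]: whole gigabytes available on the volume holding PATH (default /)
free_gb() {
    # -P: one line per file system; Available is the third field from the end
    df -Pk "${1:-/}" | awk 'NR == 2 { printf "%d\n", $(NF-2) / 1048576 }'
}

# low_on_space THRESHOLD_GB [PATH]: succeeds if less than THRESHOLD_GB is free
low_on_space() {
    [ "$(free_gb "${2:-/}")" -lt "$1" ]
}

if low_on_space 10 /; then
    echo "Less than 10 GB free: worth running the per-user du report"
fi
```

Using an absolute GB figure rather than df's Capacity percentage keeps the threshold meaningful across different disk sizes.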