
9.100+ Large environment experience?


Posted on 09-20-2017 02:00 PM

Long story short: iOS 10 forced us to upgrade to 9.96 this time last year, and I lived in a very, very dark place for several months afterward. We are now standing at the edge of the upgrade cliff again because of iOS 11. If you are running Jamf Pro 9.100 or 9.101 and have 30,000+ devices, have you had any problems since the upgrade? We currently have 7 web apps in total, 6 of them behind an F5 load balancer. All web apps are Windows Server 2008 R2 VMs with 16 GB of RAM and 4 cores each. We also have a dedicated physical Windows Server 2008 R2 machine running MySQL 5.6 with the MyISAM storage engine, and a dedicated memcache server. We are currently running Jamf Pro 9.96.1476461474, supporting 24,500 Macs and 13,500 iOS devices. I don't want to go back to the dark place again.


Posted on 09-20-2017 03:47 PM

What was the dark place? Performance issues?


Posted on 09-20-2017 04:12 PM

We don't have as many Macs as you (about a third as many), but we have more iOS devices. 9.100 and 9.101 have both been fine. 7 web apps in total? I get the need for MyISAM on a beefy MySQL box, but 7 web apps seems extreme. We run 1 primary and a second one solely for outside access (granted, my primary has something like 16 cores and 32 GB of RAM).


Posted on 09-20-2017 04:46 PM

We don't have as many devices as you, but I would still consider our installation fairly large. We were running Jamf Pro 9.99.0 until about a week ago, when we upgraded to 9.101.0, skipping 9.100.0. Since the upgrade everything has been fine, and the upgrade itself was super easy. That said, we run everything on Red Hat. So far the JSS also seems to be working well with iOS 11.


Posted on 09-20-2017 08:28 PM

It's been a challenge keeping our JSS stable with just 12k Macs. I run into a different performance issue that results in a service outage at least once a month. The solution is usually "upgrade to the latest version" or "have you thought about moving to Jamf Cloud?". :)

I don't know how Jamf officially measures performance... Internally, we define performance as the JSS's ability to process client connections. That measurement does not really cover the JSS web UI, which has always been "slow" compared to other admin consoles I use.

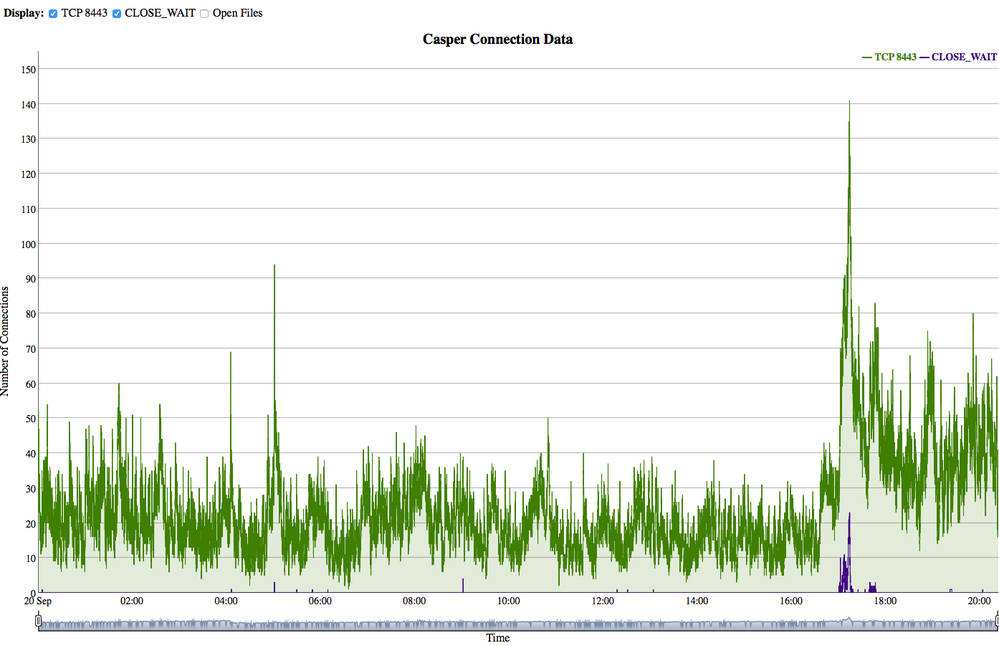

We have an internal dashboard that tracks simultaneous TCP connections to the JSS client port (8443), open files on the server, and, most importantly, the number of TCP connections in the CLOSE_WAIT state. When we see a sharp spike in CLOSE_WAIT connections, we know the JSS is struggling to keep up with client requests and a Tomcat crash is imminent. The host server's resources are not taxed: total CPU/RAM/IO usage rarely exceeds 50%. Tomcat crashes long before the server runs out of resources, so the dashboard does not track those metrics.
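As a rough illustration of the CLOSE_WAIT metric, here is a minimal sketch of a counting function. It assumes the macOS `netstat -f inet -n` column layout, where the fourth column is the local address (with the port appended after a final dot) and the sixth is the TCP state; `count_close_wait` is a hypothetical name, not part of any Jamf tooling. Matching on the local-address column avoids counting unrelated sockets whose addresses merely contain the digits 8443 (for example an ephemeral port like 18443).

```bash
#!/bin/bash
# Hypothetical helper: count sockets on a given local port that are in CLOSE_WAIT.
# Reads `netstat -f inet -n` style lines on stdin. Assumed macOS/BSD layout:
#   $4 = local address ending in ".<port>", $6 = TCP state.
count_close_wait() {
    local port="$1"
    awk -v port="${port}" '
        $4 ~ ("\\." port "$") && $6 == "CLOSE_WAIT" { n++ }
        END { print n + 0 }
    '
}

# Example: live count for the JSS client port
# netstat -f inet -n | count_close_wait 8443
```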

Our JSS has 2 app servers (DMZ + LAN) on a pair of Mac Pros, and each runs a script that outputs stats to a live web dashboard. The script is below for reference; the dashboard uses a local instance of http://dygraphs.com for a live JSS "heartbeat".

The script also has an option to restart Tomcat when a threshold is reached. This has saved me, because Tomcat will not recover on its own once it has crashed.
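One risk with an automatic restart is restarting Tomcat again and again while it is still coming back up. A minimal sketch of a cooldown guard follows; `should_restart_tomcat` is a hypothetical helper, the 1500 threshold matches the script in this post, and the 300-second cooldown is an assumed value, not something from the original script or from Jamf.

```bash
#!/bin/bash
# Hypothetical guard: decide whether a Tomcat restart is allowed right now.
# Args: current CLOSE_WAIT count, epoch seconds of last restart (0 if none), current epoch seconds.
# Returns 0 (restart) only if the count is at/above the limit AND the cooldown has elapsed.
CLOSE_WAIT_LIMIT=1500   # same threshold as the monitoring script
COOLDOWN_SECONDS=300    # assumed value; tune to how long Tomcat takes to start

should_restart_tomcat() {
    local count="$1" last_restart="$2" now="$3"
    if [ "${count}" -lt "${CLOSE_WAIT_LIMIT}" ]; then
        return 1
    fi
    if [ $(( now - last_restart )) -lt "${COOLDOWN_SECONDS}" ]; then
        return 1
    fi
    return 0
}

# Usage inside a monitoring loop (sketch):
# now="$(date +%s)"
# if should_restart_tomcat "${closed_wait}" "${last_restart:-0}" "${now}"; then
#     last_restart="${now}"
#     # ...unload/load the Tomcat LaunchDaemon here...
# fi
```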

JSS statistics: weekly inventory, hourly checkin, Application usage tracking enabled, 20+ EAs, several config profiles, 400 policies, ~100 self service policies, ~3000 network segments, 1200 buildings, 300 departments, 250 smart groups, 50 licensed app objects, LDAP, etc...

Mac Pro "server" config: 12-core Xeon, 32 GB RAM, 1 TB SSD, 10Gb fiber network link over Thunderbolt 2.

Normally my JSS averages 150 simultaneous client connections on port 8443. MySQL: ~50% CPU; Java: ~90% CPU, 6 GB RAM.

At the start of the business day, it's not uncommon to see 300-400 simultaneous connections to the JSS. MySQL: ~150% CPU; Java: ~250% CPU, 8 GB RAM.

During off hours, the JSS has 20-30 simultaneous connections. MySQL: ~10% CPU; Java: ~25% CPU, 6 GB RAM.

Tomcat starts to destabilize around 1700 connections and hard crashes at 2200. MySQL: ~250% CPU; Java: ~250-900% CPU.
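The connection counts above suggest simple alert bands for this particular environment. The sketch below encodes them; `jss_connection_state` is a hypothetical helper, and the 1700/2200 cut-offs are this poster's observations on their own hardware, not general Jamf limits.

```bash
#!/bin/bash
# Hypothetical helper: map a count of simultaneous client connections on 8443
# to a state, using the thresholds observed in this one environment.
jss_connection_state() {
    local connections="$1"
    if [ "${connections}" -ge 2200 ]; then
        echo "critical"    # hard Tomcat crashes were seen around here
    elif [ "${connections}" -ge 1700 ]; then
        echo "unstable"    # Tomcat started to destabilize around here
    else
        echo "ok"          # normal range (150 average, 300-400 at start of day)
    fi
}

# Example: jss_connection_state "$(netstat -f inet -n | grep -c 8443)"
```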

MySQL is on the same server as the master Tomcat app. We've found that ~25 GB of data passes locally between Tomcat and MySQL over a normal business day.
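For scale, a back-of-the-envelope average, assuming decimal gigabytes and an 8-hour business day (both assumptions; the post does not give the length of the day):

$$\frac{25 \times 10^{9}\ \text{B}}{8 \times 3600\ \text{s}} = \frac{25 \times 10^{9}\ \text{B}}{28\,800\ \text{s}} \approx 8.7 \times 10^{5}\ \text{B/s} \approx 0.87\ \text{MB/s} \approx 6.9\ \text{Mbit/s}$$

Since connection counts spike at the start of the day, the local traffic likely arrives in bursts well above this average.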

#!/bin/bash
# Every 5 seconds, log: date, number of TCP connections on port 8443,
# number of connections in CLOSE_WAIT, number of open files, hostname.
# If CLOSE_WAIT connections reach 1500, restart Tomcat.
# Uses macOS/BSD netstat and sysctl syntax.

log_dir="/Library/Logs/JSS/TCPConnectionLogs"
mkdir -p "${log_dir}"

while true; do
    sleep 5

    closed_wait="$(netstat -f inet -n | grep 8443 | grep -c CLOSE_WAIT)"
    total="$(netstat -f inet -n | grep -c 8443)"
    open_files="$(sysctl -n kern.num_files)"

    # One comma-separated line per sample
    result="$(date "+%Y-%m-%d %H:%M:%S"), ${total}, ${closed_wait}, ${open_files}, $(hostname)"
    echo "${result}" >> "${log_dir}/Casper_TCP_connecions$(date "+%Y%m%d").log"

    if [ "${closed_wait}" -ge 1500 ]; then
        echo "$(date): CLOSE_WAIT COUNT OVER 1500!! RESTARTING TOMCAT" >> /Library/Logs/JSS/kicked.the.cat.log
        launchctl unload /Library/LaunchDaemons/com.jamfsoftware.tomcat.plist
        sleep 2
        launchctl load /Library/LaunchDaemons/com.jamfsoftware.tomcat.plist
    fi
done

exit 0
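To review a day's log after an incident, something like the following could pull out the peaks. It is a minimal sketch assuming the comma-separated line format the script above writes (date, total connections, CLOSE_WAIT count, open files, hostname); `summarize_tcp_log` is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical helper: report peak total connections and peak CLOSE_WAIT count
# from a log written by the monitoring script (fields: date, total, close_wait, files, host).
# Reads the files given as arguments, or stdin if none are given.
summarize_tcp_log() {
    awk -F',' '
        {
            total = $2 + 0        # awk ignores the leading space when converting
            close_wait = $3 + 0
            if (total > max_total) max_total = total
            if (close_wait > max_cw) max_cw = close_wait
        }
        END { printf "peak_connections=%d peak_close_wait=%d\n", max_total, max_cw }
    ' "$@"
}

# Example:
# summarize_tcp_log /Library/Logs/JSS/TCPConnectionLogs/Casper_TCP_connecions20170920.log
```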


Posted on 09-21-2017 05:53 AM

@wilesd Yes. For about 3 months after upgrading to 9.96, we struggled daily to keep the JSS up and functioning. We are a school district, so as soon as the school day began and the JSS started getting a lot of traffic, MySQL would get overwhelmed and things would grind to a halt. Before and after the school day, things worked pretty well. We made a lot of changes and tweaks, but things did not level out until we added two more web apps.

Thank you, everyone, for your input. I know we will ultimately have to upgrade to support iOS 11; I am just very hesitant to make any big changes. I have 9.100 in a test environment set up nearly identically to production. However, there is no way to simulate the load of thousands of devices all talking to the JSS at once.


Posted on 09-21-2017 06:36 AM

We have had huge problems with our JSS since 9.98. The load on the master JSS server will hit anywhere from 20 to 70 AND STAY THERE. Communication goes down, the website goes down, and I have to bounce the Tomcat service.

We worked with JAMF for 4 months last winter. We updated a few times, updated Java, added a second JSS, and clustered, all to no avail. Finally, JAMF discovered a bug deep, deep in their code that was the cause. That helped greatly until we had to upgrade to 9.100 for iOS 11, and now the issues are back. JAMF now has us switching to Memcache. Waiting to see if that helps at all today....

We have only 600 Macs and 5800+ iPads too.