- Jamf Nation Community

Any installation from Self Service stuck on Executing forever. Is there way to troubleshoot?

Posted on 01-29-2018 12:31 PM

As of today, anything I want to install from Self Service is eternally stuck on Executing. It seems to work just fine for everyone else in the company. Is there anything I can do to troubleshoot this? How can I tell what it gets stuck on? Any log anywhere?

Posted on 01-29-2018 02:23 PM

The local install log and jamf log may be insightful:

- `/var/log/install.log`
- `/var/log/jamf.log`

If you have access to the Jamf server, you can also look at the log for the specific policy.

E
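To pull only the relevant entries out of those logs, a small helper along these lines may help. This is a minimal sketch: `last_matches` is a hypothetical function name, and the search pattern is whatever text you expect to see for your policy (for example its name); the thread does not specify one.

```shell
#!/bin/bash
# Hypothetical helper: print the last N lines matching PATTERN in each log file,
# prefixed with the file name so entries from different logs can be told apart.
last_matches() {
  local pattern="$1" n="$2"
  shift 2
  local f
  for f in "$@"; do
    if [ ! -r "$f" ]; then
      echo "skip: $f is not readable (try sudo)" >&2
      continue
    fi
    # -H forces the file-name prefix even when only one file is given
    grep -H -- "$pattern" "$f" | tail -n "$n"
  done
}
```

For example, `last_matches "<policy name>" 20 /var/log/jamf.log /var/log/install.log` run right after clicking the item in Self Service.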

Posted on 06-19-2018 07:45 AM

We're seeing this issue as well. Any update or information regarding this, @btomic?

Posted on 06-19-2018 08:16 AM

I just started seeing this behavior as well. The policy is stuck on Executing, and there is no evidence in jamf.log or install.log that it is actually running. The same policy executed via another trigger (like recurring check-in) works perfectly.

Posted on 06-19-2018 08:44 AM

@rice-mccoy Thanks for the reply. What has been your work-around/fix? A complete removeFramework and re-enrollment? I am currently feeding Jamf Support information and logs on this random issue, but I am not seeing a trend or commonality at this point. Some interesting things observed in the logs would be this:

System.log, about every 10 seconds:

```
Jun 19 14:06:20 <MACHINENAME> com.apple.xpc.launchd[1] (com.jamfsoftware.jamf.agent[13270]): Service exited due to signal: Terminated: 15 sent by killall[13273]
Jun 19 14:06:20 <MACHINENAME> com.apple.xpc.launchd[1] (com.jamfsoftware.jamf.agent): Service only ran for 0 seconds. Pushing respawn out by 10 seconds.
```

jamf.log, at about the same frequency:

```
Tue Jun 19 14:06:42 <MACHINENAME> jamf[12915]: Failed to load jamfAgent for user <mgmtaccount>
Tue Jun 19 14:06:51 <MACHINENAME> jamf[12915]: Informing the JSS about login for user <userid>
```

I was able to confirm that a Mac App Store (VPP) policy worked when invoked from Self Service, but other policies would just sit at "Executing" as well.

Posted on 06-19-2018 12:23 PM

@benducklow it has not been widespread.

I think most cases cleared by force quitting the running Jamf processes (jamfAgent, JamfDaemon, jamf); I'm not sure which one finally did it. Like you, we found VPP policies weren't impacted.

If I see it again I will look more closely at the log for any matches. (We are a small shop.)

Posted on 06-19-2018 12:36 PM

@rice-mccoy Thanks. By force quitting, were you just running kill with the PID from Terminal (e.g. `sudo kill <PID>`)? If so, I guess we haven't tried that yet. Did you do anything to (re)start those processes?

Thanks in advance for sharing your experience!

Posted on 06-20-2018 07:55 AM

@benducklow Yes, `sudo kill`. The jamf daemon seemed to restart automatically. Self Service opened back up and the policy started immediately (the status went Executing > Downloading > Installing), and jamf.log showed the usual entries, which weren't present at all before the `sudo kill`.
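To find the PIDs to kill, a sketch like the following lists Jamf-related processes. `ps -axo pid=,comm=` is standard macOS `ps`; treating every executable whose name starts with "jamf" (case-insensitive) as a candidate is an assumption, so review the list before killing anything. `filter_jamf_procs` is a hypothetical name.

```shell
#!/bin/bash
# Hypothetical filter: read "PID COMMAND" lines on stdin and print
# "PID NAME" for processes whose executable name starts with "jamf".
filter_jamf_procs() {
  awk '{
    pid = $1
    cmd = $0
    # Drop the leading PID; the rest is the command path, which may contain spaces
    sub(/^[ \t]*[0-9]+[ \t]+/, "", cmd)
    n = split(cmd, parts, "/")
    name = parts[n]
    if (tolower(name) ~ /^jamf/) print pid, name
  }'
}
```

Usage: `ps -axo pid=,comm= | filter_jamf_procs`, then `sudo kill <PID>` for the process you want to restart.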

Posted on 07-11-2018 09:19 AM

I'm having this issue too and am currently working with Jamf Support. Will let you know if we find a resolution.

Posted on 07-17-2018 09:21 AM

This issue just popped up for us. Trying to troubleshoot it. Curious if anybody has figured out a solid solution.

Posted on 07-17-2018 09:38 AM

@churcht No solid solution, but it has been identified with PI-005944. One thing that has 'kind of' worked (although not 100% according to feedback I have received) is to run the following against a machine:

```bash
#!/bin/bash
# This script must be run as root or with "sudo"

echo "Stopping the jamfAgent and removing it from launchd..."
/bin/launchctl bootout gui/$(/usr/bin/stat -f %u /dev/console)/'com.jamfsoftware.jamf.agent'
sleep 1
/bin/rm /Library/LaunchAgents/com.jamfsoftware.jamf.agent.plist

echo "Running jamf manage to download and restart the jamfAgent..."
/usr/local/jamf/bin/jamf manage
```

We've been doing this via Jamf Remote on a case-by-case basis, with mixed results.
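One weak spot in that script is that `stat -f %u /dev/console` typically returns 0 (root) when nobody is logged in at the GUI, so the `bootout` target would point at root's GUI domain. A defensive sketch builds the launchctl service target only for a regular user. The function name is hypothetical, and the 501 cutoff reflects the default UID of the first local macOS account; treat it as an assumption for your environment.

```shell
#!/bin/bash
# Hypothetical helper: print the launchd service target for the jamfAgent in the
# GUI domain of UID, refusing non-numeric UIDs and UIDs below 501 (root/system).
agent_service_target() {
  local uid="$1"
  case "$uid" in
    ''|*[!0-9]*) return 1 ;;   # empty or not a number
  esac
  [ "$uid" -ge 501 ] || return 1
  echo "gui/${uid}/com.jamfsoftware.jamf.agent"
}
```

It could wrap the original call as `if target=$(agent_service_target "$(/usr/bin/stat -f %u /dev/console)"); then /bin/launchctl bootout "$target"; fi`.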

Posted on 11-07-2018 11:53 AM

I'm experiencing the same effects on some but not all machines. Just stuck and repeatedly informing JSS of a user login. Tried killing it and restarting the machine.

Posted on 12-16-2018 10:58 PM

Anyone having any luck with this? I'm seeing this with increasing frequency, so it's becoming a real problem.

The logs don't give much. The selfservice_debug.log shows

```
Binary request: triggerPolicy
```

but then nothing after that, and the Self Service item just remains on "Executing" forever. On a Mac that works fine, it's followed immediately with

```
Message received from the jamf daemon : {
...
```

I've tried manually killing the processes as mentioned above, but it makes no difference.
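The symptom described here (a `Binary request: triggerPolicy` line with no `Message received from the jamf daemon` reply after it) can be checked mechanically. This is a sketch: the function name is hypothetical, it assumes the two message strings appear as quoted above, and you pass the path to selfservice_debug.log yourself because the thread doesn't give its location.

```shell
#!/bin/bash
# Hypothetical check: exit 0 if the most recent "Binary request: triggerPolicy"
# in the Self Service debug log was never followed by a reply from the daemon.
# Exit 1 if it was answered, 2 if the log can't be read.
last_trigger_unanswered() {
  local log="$1"
  [ -r "$log" ] || return 2
  awk '
    /Binary request: triggerPolicy/         { pending = 1 }
    /Message received from the jamf daemon/ { pending = 0 }
    END { exit (pending ? 0 : 1) }
  ' "$log"
}
```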

Posted on 01-02-2019 09:59 PM

Hi all,

I'm doing some more testing, and I think my issue is related to how I do invitation based enrolments (I have a script that uses an invitation code that doesn't expire). If I run "jamf removeFramework" and then re-enrol via a user-initiated enrolment (either via MDM profile, or QuickAdd), my Self Service works as intended.

I'm unable to use DEP in my current environment, so this is my only other option for now.

I've still got a lot more testing to do, but if someone else who is having this issue could give it a go, it'd be interesting to see if it fixes it for you as well.

Posted on 05-15-2019 09:02 AM

@benducklow I set up your script to run from a policy with a custom trigger, so if anyone on our team has issues, we can just run `sudo jamf policy -trigger fixselfservice`. It's been working almost all the time so far. Thanks so much.

Posted on 05-21-2019 12:34 PM

Experiencing this issue as well, more recently, and killing the process doesn't seem to resolve it for us consistently. We are opening a ticket - will update if we get any more info.

Posted on 05-21-2019 06:09 PM

I’ve had this issue for a few weeks, and I asked around in my Jamf 300 class and no one knew anything about it. I’ve removed the framework, but I still have the same issue.

Posted on 05-29-2019 10:16 AM

Hey all, on JAMF 10.11.1 here and I just saw this bug for the first time. Running the script @benducklow provided fixed the problem on the machine.

This was on a DEP machine as well, so it looks like the type of enrollment doesn't make much difference on whether or not you see this bug. Hope this doesn't become widespread, we have about 100 machines to get done and it's already taking more time with the "fun" that is DEP and not being able to image!

Update: We've gotten about five or six out, and only one has NOT needed the fix. Terrific, MORE steps in our provisioning process! Also, one laptop didn't wanna cooperate; I ran `jamf policy` in Terminal, and it mentioned it was upgrading the jamf helper. On a new install? But then it worked! Bizarre. Oh well, at least there is a fix. :/

Posted on 05-29-2019 12:57 PM

Yeah, this issue seems to have crept up again with the update to v10.11.1. The 'fix' was not working, though. It may or may not have been due to a large cache deployment (a ~6 GB Mojave install package) that was happening, but Support saw a rash of these cases come in during the days following the upgrade.

Posted on 05-29-2019 01:08 PM

I had a 10.13 Mac do this today. removeFramework and re-enroll didn't fix it the first time, so I rebooted after the RFW command and then enrolled, and that worked. I have a ticket open on this with JAMF, and they wanted a bunch of logs and stuff, which I gathered before "fixing" it.

I guess we will see what they see. It seems pretty random and the fix seems equally random. Some I've been able to just run the manage command, others have been full on re-enrollment.

Posted on 05-29-2019 01:13 PM

Keep us posted, @dpodgors, as well as others following this thread. I thought we had traced this to things happening in the background (i.e., a software download or cache in progress), but that doesn't seem to be the case 100% of the time...

Posted on 05-29-2019 02:15 PM

Yeah, I was wondering if having Self Service deploy at Enrollment Complete was messing something up, so I turned that off and set it to install at check-in only; no change. Luckily Ben's script has fixed this in all cases for us, with no restart needed so far. For those of you who've encountered this, does it usually stay fixed, or might it break again? I guess at least the jamf binary seems to be unaffected, so there are ways around it if it does. Still annoying, though!

Posted on 05-29-2019 03:28 PM

We're also getting reports of users unable to run policies through Self Service and getting stuck at "Executing...". Running removeFramework and re-enrolling did not help.

Posted on 05-30-2019 06:57 AM

Just adding a +1 here. I'm seeing it too. Waiting to hear back if the recommended script above worked to remedy it.

Posted on 05-31-2019 08:26 AM

Possibly unrelated, but any script or installer that prompts for input (e.g. jamfHelper) can hang the jamf daemon, which also processes Self Service requests. We saw this all the time due to poor admin scripting practices. To combat that and future admin error, I've deployed a monitor that watches for jamf daemon subprocesses and, if one has been running longer than X seconds (say, 6 hours), gives it a quick kill.

In a pinch, this allows us to at least keep machines reporting until we identify the policy that caused the issue. Any improvements are welcome, such as a better way to identify jamf daemon children.

```bash
#!/bin/bash
# jamfalive.sh
# Daemonize and call on an interval to keep jamf alive
# Example: /Library/LaunchDaemons/com.company.jamfalive.plist

# Time to live - adjust for your environment
ttl=21600 # 6 hours
# ttl=14400 # 4 hours
# ttl=10800 # 3 hours

# Some general health checks without touching the JSS
chmod +rx /usr/local/jamf/bin/jamf
chmod 6755 /usr/local/jamf/bin/jamfAgent
rm -f /usr/local/bin/jamf; ln -s /usr/local/jamf/bin/jamf /usr/local/bin/jamf
if ! launchctl list | grep -q jamf.daemon; then
  launchctl load -w /Library/LaunchDaemons/com.jamfsoftware.jamf.daemon.plist
fi

# Exclude the launchDaemon monitor PID
JAMF_DAEMON_PID=$(pgrep -f "jamf launchDaemon")

# PIDs to age and kill if hung (pgrep -x matches the process name exactly)
JAMF_BINARY_PIDS=($(pgrep -x "jamf"))
JAMF_HELPER_PIDS=($(pgrep -x "jamfHelper"))
PID_LIST=( "${JAMF_BINARY_PIDS[@]}" "${JAMF_HELPER_PIDS[@]}" )

for P in "${PID_LIST[@]}"; do
  # Skip the daemon process
  if [ "$P" == "$JAMF_DAEMON_PID" ]; then continue; fi

  # Check the age of the process
  now=$(date "+%s") # epoch of right now
  max_age=$(( now - ttl ))
  # ps reports the start time as text; skip the PID if it has already exited
  start_time_str=$(ps -o lstart= -p "$P" | sed -e 's/[[:space:]]*$//')
  [ -z "$start_time_str" ] && continue
  start_time=$(date -j -f "%c" "$start_time_str" "+%s") # convert to epoch seconds

  # Kill any process that started more than ttl seconds ago ($max_age)
  if [ "$start_time" -lt "$max_age" ]; then
    pname=$(basename "$(ps -o comm= -p "$P")")
    pfull=$(ps -p "$P" -o command=)
    # Log the action - output location is specified in the launchd definition
    echo "$(date "+%a %b %d %T") $(hostname -s) jamfalive - PID: $P ($pname) $start_time_str"
    echo "$(date "+%a %b %d %T") $(hostname -s) jamfalive - $pfull"
    # Kill the process
    kill -9 "$P"
  fi
done
```
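The `date -j -f "%c"` step in that script depends on the textual `lstart` format and the current locale. As an alternative sketch (not part of the original script), macOS `ps` can report a process's elapsed time directly with the `etime` keyword, in `[[dd-]hh:]mm:ss` form; the hypothetical helper below converts that to seconds.

```shell
#!/bin/bash
# Convert a BSD/macOS `ps -o etime=` value ([[dd-]hh:]mm:ss) to whole seconds.
etime_to_seconds() {
  local etime="$1" days=0 hours=0 mins=0 secs=0 f
  local -a parts
  # Split off the optional "dd-" day prefix
  if [[ "$etime" == *-* ]]; then
    days="${etime%%-*}"
    etime="${etime#*-}"
  fi
  # The remainder is either hh:mm:ss or mm:ss
  IFS=: read -r -a parts <<< "$etime"
  case "${#parts[@]}" in
    3) hours="${parts[0]}"; mins="${parts[1]}"; secs="${parts[2]}" ;;
    2) mins="${parts[0]}"; secs="${parts[1]}" ;;
    *) return 1 ;;
  esac
  # Reject any field that is not purely digits before doing arithmetic
  for f in "$days" "$hours" "$mins" "$secs"; do
    [[ "$f" =~ ^[0-9]+$ ]] || return 1
  done
  # 10# forces base 10 so zero-padded fields such as "08" are not parsed as octal
  echo $(( 10#$days * 86400 + 10#$hours * 3600 + 10#$mins * 60 + 10#$secs ))
}
```

Inside the loop, `age=$(etime_to_seconds "$(ps -o etime= -p "$P" | tr -d ' ')")` followed by `[ "$age" -gt "$ttl" ]` would replace the start-time comparison.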

Posted on 05-31-2019 09:16 AM

I found a solution this morning that worked for me.

Problem: Self Service apps stuck at “Executing”.

Solution: Download a new Self Service app from the JSS Self Service Settings and replace the old versions. Here’s why:

During original testing, I was using a 2013 Mac Pro and a Late 2013 iMac, and Self Service apps all installed properly. It wasn’t until I tried Self Service on my MacBook Pro (13-inch, 2016, 4 Thunderbolt 3 Ports) that the problem showed up. I then set up 3 MacBook Pros (Retina, 13-inch, Early 2015) and had the same problem. I initially thought that it was something different in the newer Macs vs. the 2013 models. But what?

My next thought was that the problem Macs were all running macOS 10.14.5, while the JSS Mac was on 10.13 with JSS 10.11.1. So I updated the JAMF server (Mac Pro 2013) to macOS 10.14.5 and JSS 10.12.0. This did not solve the problem.

Finally, I thought about everything that had been changed and asked, “What hasn’t changed?” I immediately thought of the Self Service app itself: the copy being used had been downloaded from the JSS before the server updates. I downloaded a new copy, installed it on the problem Macs, and the Self Service apps installed.
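If you suspect a stale Self Service.app, comparing its bundle version with the server version is a quick check. This is a sketch under stated assumptions: the app lives at `/Applications/Self Service.app` (some sites rename it), you supply the server version yourself, and whether the Self Service bundle version is expected to match the Jamf Pro version exactly is an assumption this thread does not confirm. The comparison itself is generic dotted-number logic in a hypothetical `version_lt` function.

```shell
#!/bin/bash
# Generic dotted-version comparison: succeed if version $1 is strictly older than $2.
# Non-digit characters inside a component are ignored; missing components count as 0.
version_lt() {
  local -a a b
  IFS=. read -r -a a <<< "$1"
  IFS=. read -r -a b <<< "$2"
  local i n=${#a[@]} x y
  if [ "${#b[@]}" -gt "$n" ]; then n=${#b[@]}; fi
  for (( i = 0; i < n; i++ )); do
    x="${a[i]:-0}"; y="${b[i]:-0}"
    x="${x//[^0-9]/}"; y="${y//[^0-9]/}"
    x=$(( 10#${x:-0} )); y=$(( 10#${y:-0} ))
    if (( x < y )); then return 0; fi
    if (( x > y )); then return 1; fi
  done
  return 1   # equal versions are not "less than"
}
```

On a client: `installed=$(defaults read "/Applications/Self Service.app/Contents/Info" CFBundleShortVersionString)`, then `version_lt "$installed" 10.12.0 && echo "Self Service.app predates the server version"`.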

I'm very happy now.

Posted on 05-31-2019 09:46 AM

We've had mixed results reinstalling Self Service. Get a ticket opened with your SME and ask to be attached to SUSHELP-1117. It is an internal ticket number with their sustaining engineering team.

Posted on 06-03-2019 05:10 AM

rsterner

Unfortunately for us, we are cloud-based and have experienced this across all Mac models.

I sent off all the logs they wanted and got the Mac back up and running. This time it required a removeFramework and re-enroll.

They came back with "we need more logs" so I'll have to wait for another hanging SS.

Posted on 06-05-2019 08:31 AM

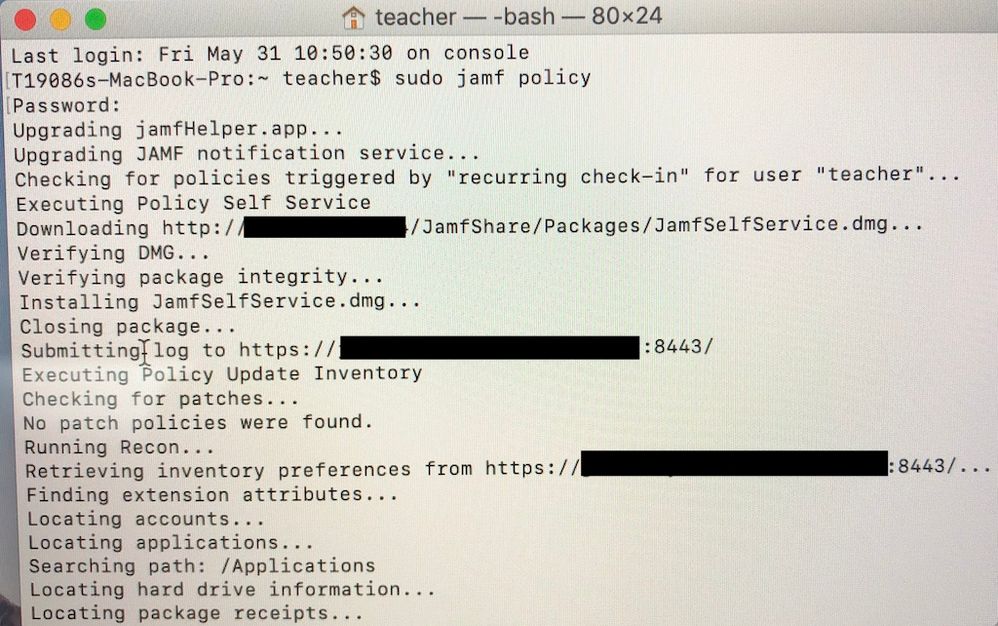

I just wanted to add something I'm seeing on machines with this issue. When we do an initial `jamf policy` to get Self Service to come down (because the Enrollment Complete trigger is still super unreliable, sigh), I get "upgrading jamfHelper.app" and "upgrading JAMF Notification Service", as seen in this screenshot. I find the messages odd, as these are fresh-out-of-the-box Macs. Just thought I'd share these in case JAMF is watching this thread. Sadly I am dealing with too many dumpster fires right now to open a case, and it seems quite a few people already have anyway.

Posted on 06-06-2019 01:48 PM

We've seen this same issue on a few machines recently too. No additional information to add, just adding an incident report :/

Posted on 06-13-2019 06:54 AM

Hey all, waiting on a fix/explanation for this as well. Tried to simply install Chrome for a user from Self Service and it hung at Executing for many minutes, so I ended up installing it manually.

Posted on 06-13-2019 07:04 AM

I called back in and (re)opened my old case regarding this issue, and as @stuart.harrop suggested, I got linked with SUSHELP-111. So far the response from Support is that they are looking into it further. In the meantime I keep feeding them the jamf.log/install.log/system.log as I get them from our support team. It seems at this point (we're on v10.11.1) that removing the framework and re-enrolling is the only workaround :(

Posted on 06-18-2019 07:44 AM

Received a note from our SME. They are looking to implement a fix in Jamf v10.14.

Posted on 07-11-2019 02:51 AM

We have also been seeing this issue for about a week (randomly, and only on a few devices).

One case could be fixed with removeFramework and re-enrollment. Another could not be fixed with re-enrollment.

BTW... this issue has been known for more than 1 1/2 years and still isn't fixed by JAMF... sad...

Posted on 07-11-2019 10:21 AM

I agree. JAMF and Apple both need to go on a bug-squashing spree. Stop adding things, stop changing interfaces, stop making things shiny, and just focus on all these little nitty-gritty issues that are starting to add up to significant frustration. [/soapbox]

Also, we finished our DEP setup and had to run the fix script on, I would say, 70% of them. Now we're doing some older computers and haven't had one do it so far. Really bizarre.

Posted on 08-06-2019 06:59 AM

Yeah, I recently upgraded our on-prem Jamf to 10.13. The upgrade was successful, but now we're seeing this issue. As this has been a known issue for over a year, can we get someone from Jamf to confirm they're looking into it?

Posted on 08-06-2019 08:10 AM

I too had this issue when we upgraded to Jamf Pro 10.14. In my case it was a really offbeat issue that hadn’t happened since we put in Jamf Pro. Policies would run from Terminal but not from Self Service. I figured out that the new Self Service didn’t like spaces in the distribution point share name. Once I figured that out, it was an easy fix. Don’t know if that necessarily applies to you, but it did afflict us.

Posted on 08-06-2019 08:29 AM

@blackholemac Interesting discovery I guess with the spaces in the Distribution point name. Could you elaborate on that a bit? Not sure if it's a coincidence or what. We don't have any spaces in our DP share name and still see it from time to time; mostly right after we did the last update to 10.11.1 (which we're still on for the time being). Wonder if people are still seeing it with 10.14.0??

Posted on 08-06-2019 08:52 AM

The funny thing is that we have always had a space in our DP name. I even carried that convention over to our new JSS a few years back. (That part is a long story for another time.) Anyway, all was working hunky-dory until we upgraded before school started. I noticed my Self Service policies kept erroring out. My push policies were working fine, and if I ran the Self Service policies from the command line (`sudo jamf policy -id <your policy number here>`), they would work fine. They only failed in Self Service. Logs showed that it couldn’t mount the distribution point, yet I could mount it properly from “Connect to Server”. After agonizing over that for a long time trying to figure out why, I tried different shares and it still failed. At that point I called Jamf... while waiting on an expert, I tried changing the share point name as a last resort. For the record, Jamf told me to try the same thing 10 minutes after I had tried it. That was the answer. I’m lucky in that I am not married to that share point name. When building new on-prem installs in the future, I know NOT to include a space in the SMB distribution point name ever again!
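When auditing several distribution points for the naming problem described here, a trivial check can flag names that contain whitespace or a URL-encoded space (`%20`). The function name is hypothetical, and it only inspects a string you pass in; it does not query Jamf Pro.

```shell
#!/bin/bash
# Hypothetical check: succeed if a share name contains whitespace or "%20"
share_name_has_space() {
  local name="$1"
  [[ "$name" =~ [[:space:]] || "$name" == *%20* ]]
}
```

For example, `share_name_has_space "Casper Share" && echo "rename this share"` (the share name is illustrative).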

Posted on 08-06-2019 10:55 AM

Same issue here. Everything I run from Self Service hangs on Executing. 10.14 cloud instance. The policies do run from the command line, as mentioned above. I'm using a Cloud Distribution Point. Is there anything else I can try?