I'm tinkering with web scraping in bash, and I'm getting a result, but it's inelegant and, I suspect, unreliable.

What I'd like to learn is how to take the Xth table from a given web page and put the contents of its rows and cells into a CSV file.
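One way to do the table-to-CSV step with only stock macOS tools is to let `awk` do the parsing. The sketch below defines a hypothetical shell function, `nth_table_to_csv`, that reads HTML on stdin and prints the Xth table as CSV. It is not a real HTML parser. It assumes lowercase tag names and no nested tables, and it simply deletes any markup it finds inside a cell.

```shell
# nth_table_to_csv N  (hypothetical helper, not a standard command)
# Reads HTML on stdin and prints the Nth <table> (1-based) as CSV on stdout.
# Assumptions: lowercase tag names, no nested tables; markup inside a cell
# is deleted. Exits with status 1 if there is no Nth table.
nth_table_to_csv() {
  awk -v want="$1" '
    { buf = buf " " $0 }                      # join all input lines into one string
    END {
      for (i = 1; i < want; i++) {            # skip past the first want-1 tables
        p = index(buf, "</table>")
        if (p == 0) exit 1
        buf = substr(buf, p + 8)
      }
      s = index(buf, "<table")
      e = index(buf, "</table>")
      if (s == 0 || e < s) exit 1
      nrows = split(substr(buf, s, e - s), rows, "<tr")
      for (r = 2; r <= nrows; r++) {          # rows[1] is the text before the first <tr
        row = rows[r]
        gsub(/<th>/, "<td>", row)             # treat header cells like data cells
        gsub(/<th /, "<td ", row)
        ncells = split(row, cells, "<td")
        line = ""
        for (c = 2; c <= ncells; c++) {
          cell = cells[c]
          sub(/^[^>]*>/, "", cell)            # rest of the opening tag, e.g. align="center">
          sub(/<\/t[dh]>.*/, "", cell)        # closing tag and anything after it
          gsub(/<[^>]*>/, "", cell)           # any other tags inside the cell
          sub(/^[ \t]+/, "", cell)            # trim leading whitespace
          sub(/[ \t]+$/, "", cell)            # trim trailing whitespace
          gsub(/"/, "\"\"", cell)             # CSV: double any embedded quotes
          line = line (c > 2 ? "," : "") "\"" cell "\""
        }
        if (ncells > 1) print line            # skip rows that have no cells
      }
    }'
}
```

For example, `curl -s "https://macadmins.software" | nth_table_to_csv 2 > table.csv` should write the second table on the page to `table.csv`. Every field is double-quoted and embedded quotes are doubled, so cells that contain commas survive the round trip. HTML entities such as `&nbsp;` are left as-is.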

Once that's done, I'd want to normalise some of the data: for example, dumping formatting tags, stripping `<a>` tags but keeping the link URL, and stripping `<img>` tags but keeping the image URL.
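For the normalisation step, one option is to clean the HTML before it is split into cells. The sketch below defines a hypothetical `normalise_html` function that replaces each whole `<a ...>...</a>` element with its `href` URL (dropping the link text, which matches the URL-only column in the example CSV), replaces each `<img ...>` tag with its `src` URL, and deletes every other tag except the table structure tags, so the output can still be split into rows and cells. It assumes lowercase tag and attribute names, values written as `href="..."` or `href='...'` with no spaces around `=`, and tags that do not span lines.

```shell
# normalise_html  (hypothetical helper, not a standard command)
# Reads HTML on stdin, one line at a time, and:
#   - replaces each <a ...>...</a> element with the URL in its href
#   - replaces each <img ...> tag with the URL in its src
#   - deletes all other tags except table, thead, tbody, tfoot, tr, th, td
#   - decodes &amp; so the URLs come out usable
# Assumes lowercase names, quoted attribute values, and tags that do not span lines.
normalise_html() {
  awk -v sq="'" '
    BEGIN {
      attr = "(href|src)=[\"" sq "][^\"" sq "]*"   # attribute up to its closing quote
      keep = "</?(table|thead|tbody|tfoot|tr|th|td)[ >]"
      m = "\001"                                   # marker, assumed absent from the input
    }
    {
      line = $0; out = ""
      while (match(line, /<(a|img)[ \t][^>]*>/)) {
        tag = substr(line, RSTART, RLENGTH)
        out = out substr(line, 1, RSTART - 1)
        line = substr(line, RSTART + RLENGTH)
        if (match(tag, attr)) {
          url = substr(tag, RSTART, RLENGTH)
          sub(/^(href|src)=./, "", url)             # drop the name and opening quote
          out = out url
        }
        if (tag ~ /^<a[ \t]/ && (e = index(line, "</a>")) > 0)
          line = substr(line, e + 4)                # drop the link text and </a>
      }
      out = out line
      gsub(keep, m "&", out)        # put a marker in front of each structural tag
      gsub(m "<", m, out)           # and hide its "<" from the next step
      gsub(/<[^>]*>/, "", out)      # drop every remaining (formatting) tag
      gsub(m, "<", out)             # restore the structural tags
      gsub(/&amp;/, "\\&", out)     # turn &amp; back into a plain ampersand
      print out
    }'
}
```

Because the table tags survive, this can sit between `curl` and the table-to-CSV step. The marker trick is there only because POSIX `awk` has no negative lookahead for "every tag except these".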

I also want to do this on a clean macOS system, using only the built-in tools.

I could probably get there if I kept beating on it, but I'm reaching out to the great gestalt brain of the mighty Jamf Nation to see if anyone has already done this. Any suggestions are welcome.

Below is the example I am working with. I do not want to focus on the peculiarities of this particular web page, but rather on the generic, portable approach described above.

I'm using this...

```bash
curl -s "https://macadmins.software" |
  sed -n '/Volume License/,$p' |     # from the Volume License section on
  sed -n '\#<table#,\#</table>#p' |  # "#" delimiter, so "/" needs no escape
  sed '\#</table>#q' |               # stop after the first table
  awk '/Standalone|AutoUpdate/ && !/OneDrive/' |
  awk '{gsub("<tr>|</tr>|<td align=\"center\">|<td>|<a href=\047", ""); gsub("</td>", ","); print}' |
  cut -f1 -d"'"                      # drop the rest after the href's closing quote
```

To get this CSV...

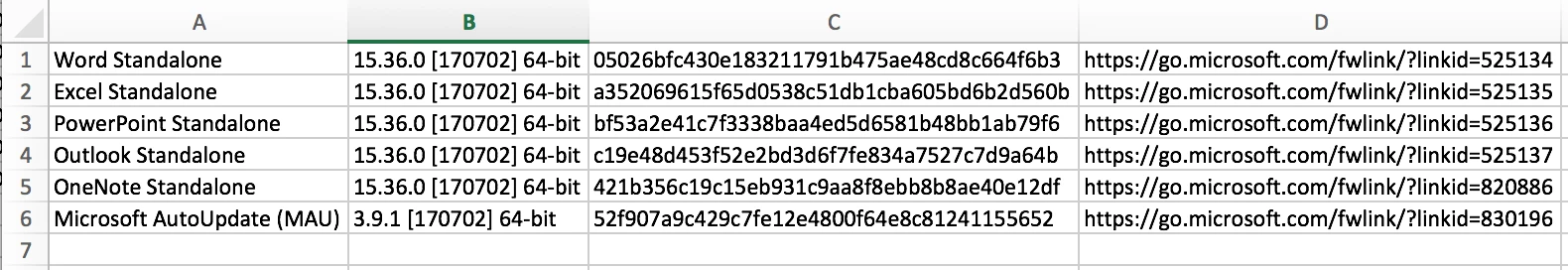

```
Word Standalone,15.36.0 [170702] 64-bit,05026bfc430e183211791b475ae48cd8c664f6b3,https://go.microsoft.com/fwlink/?linkid=525134
Excel Standalone,15.36.0 [170702] 64-bit,a352069615f65d0538c51db1cba605bd6b2d560b,https://go.microsoft.com/fwlink/?linkid=525135
PowerPoint Standalone,15.36.0 [170702] 64-bit,bf53a2e41c7f3338baa4ed5d6581b48bb1ab79f6,https://go.microsoft.com/fwlink/?linkid=525136
Outlook Standalone,15.36.0 [170702] 64-bit,c19e48d453f52e2bd3d6f7fe834a7527c7d9a64b,https://go.microsoft.com/fwlink/?linkid=525137
OneNote Standalone,15.36.0 [170702] 64-bit,421b356c19c15eb931c9aa8f8ebb8b8ae40e12df,https://go.microsoft.com/fwlink/?linkid=820886
Microsoft AutoUpdate (MAU),3.9.1 [170702] 64-bit,52f907a9c429c7fe12e4800f64e8c81241155652,https://go.microsoft.com/fwlink/?linkid=830196
```

From this page...

https://macadmins.software