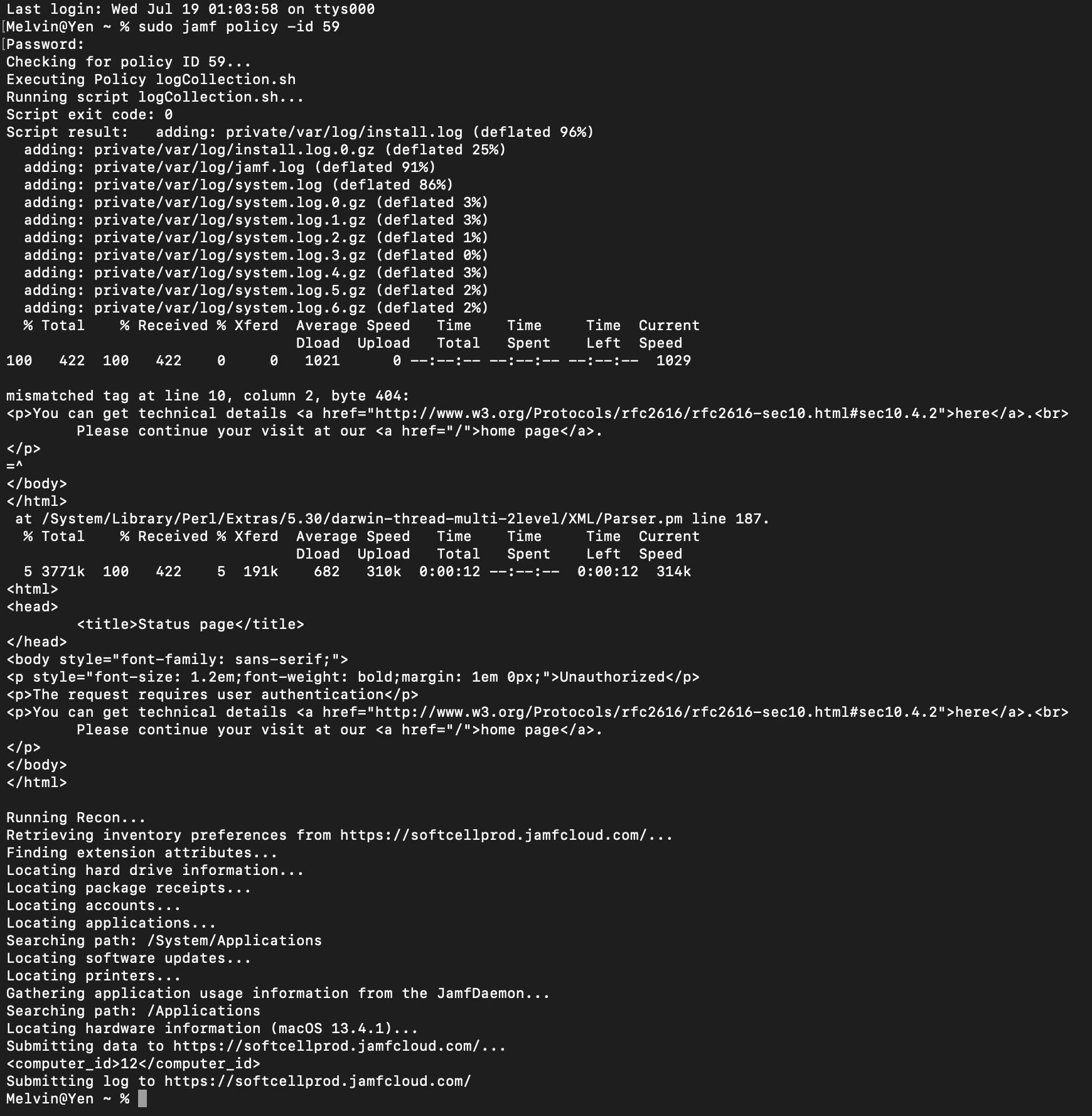

What methods are people using to get logs off of users' systems? For example, a user calls in saying they have some issue with their system. We'll generally then take a look at system.log, install.log, jamf.log, etc. by remoting into their system, having them email us a copy, or looking at the files directly.

I'm thinking it would be much better if I could script a Self Service item that uploads those files to an available share, pull them with Casper Remote (without needing to screen share), or do something similar. Has anyone done anything like this?
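
To make the idea concrete, here is a rough sketch of the kind of script I have in mind, not something I have deployed. It assumes the logs live in the usual `/var/log` locations, that the script runs with enough privileges to read them, and that the share is already mounted; the function name `collect_logs` and the archive naming scheme are made up for illustration.

```python
import socket
import time
import zipfile
from pathlib import Path

# The logs mentioned above. These paths are the usual macOS locations,
# which is an assumption; adjust them for your environment.
LOG_PATHS = [
    Path("/var/log/system.log"),
    Path("/var/log/install.log"),
    Path("/var/log/jamf.log"),
]


def collect_logs(log_paths, dest_dir, host=None):
    """Zip whichever of log_paths exist and write the archive into dest_dir.

    dest_dir is expected to be a writable folder, for example a mounted
    share. Returns the path of the archive that was created.
    """
    host = host or socket.gethostname()
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)

    # Hypothetical naming scheme: <hostname>-logs-<timestamp>.zip
    archive = dest_dir / f"{host}-logs-{stamp}.zip"
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in log_paths:
            path = Path(path)
            if path.is_file():  # skip logs that are missing on this machine
                zf.write(path, arcname=path.name)
    return archive
```

Something like `collect_logs(LOG_PATHS, "/Volumes/SupportLogs")` would then drop one archive per machine on the share, where `/Volumes/SupportLogs` is a placeholder mount point. Mounting the share and handling credentials are left out here.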