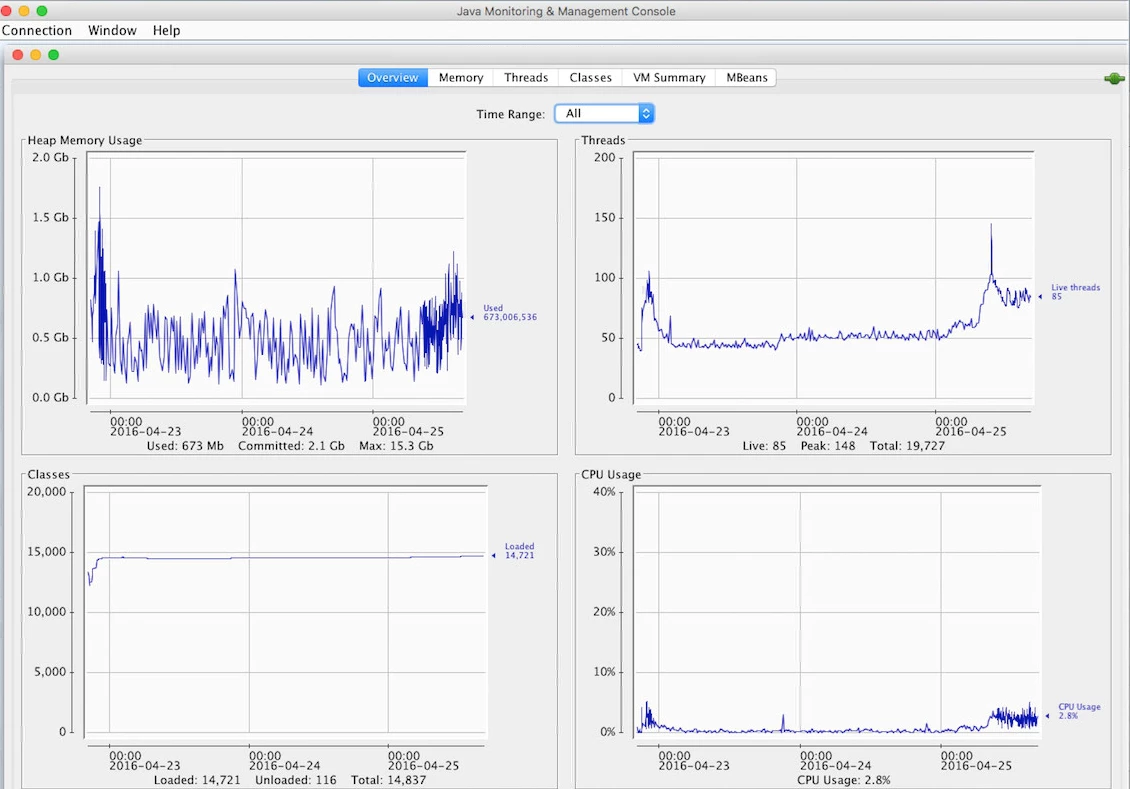

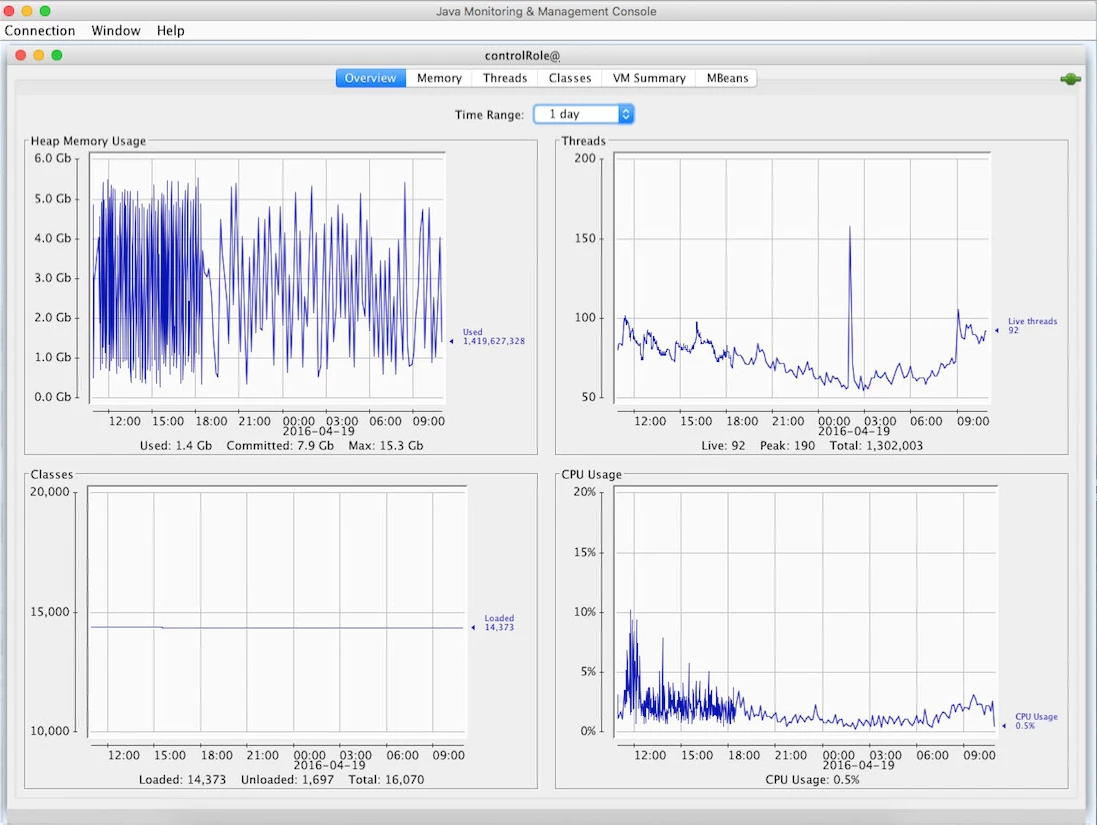

We have an issue where periodically (2-3x a month?) Tomcat will simply freeze up. The service is still running, but it stops responding. Restarting fixes this 100% of the time, so it's not impacting us greatly, but I'm curious whether anyone has seen something like this.

We're running v.9.92 on a physical server running RHEL 6.8 (Santiago). The server has plenty of memory (64 GB) and storage (6 TB, less than 10% used currently).